I had one of the most satisfying Saturdays last weekend, and that feeling is the reason I'm writing a blog post after almost a year.

I often come across this quote by Richard Branson: "If someone offers you an amazing opportunity and you are not sure you can do it, say yes - then learn how to do it later." That is exactly what I did. Having been a WiDS Mumbai Ambassador for two years in a row now, I sometimes can't believe how much exposure and confidence the role has given me, simply because I grabbed the opportunity at the right time.

WiDS (Women in Data Science), with its mission of INSPIRE. EDUCATE. SUPPORT, has created a huge platform for women in this field to come together, spread their knowledge and take pride in sharing what they know, which I believe is the best way to feel good about yourself and your capabilities. All it takes is a small networking session at the conference to learn about other people's perspectives, share what you know, and realise what wonders can happen when all these thoughts and ideas come together.

Here at WiDS Mumbai, we realised that although our annual conference does justice to INSPIRE and SUPPORT, we could do more to EDUCATE and make the mission complete. That's when Khushboo, my co-ambassador, pushed me to take the first WiDS Mumbai workshop for students at her alma mater. I couldn't be more thankful for this opportunity, which made me realise how genuinely I enjoyed conducting the workshop for the whole 2 hours.

My workshop was a hands-on one. I started with a brief introduction to supervised and unsupervised learning approaches and then moved on to my topic, neural networks. I first explained what a neuron is, how it functions, and what its activation function is and does. I then gave a very high-level overview of how a neural network learns by adjusting its weights and biases, and just scratched the surface of the back-propagation and gradient descent algorithms.
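To make the neuron, activation function and gradient descent ideas a little more concrete, here is a minimal pure-Python sketch (not the workshop's Keras code) of a single sigmoid neuron learning logical AND. The toy dataset, learning rate and number of epochs are illustrative assumptions. It uses the cross-entropy loss, for which the chain rule simplifies the gradient at the neuron's weighted sum to `out - y`.

```python
import math

def sigmoid(z):
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, b):
    """A neuron: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def train_neuron(data, lr=1.0, epochs=5000):
    """Learn the weights and bias with full-batch gradient descent."""
    n_inputs = len(data[0][0])
    w = [0.0] * n_inputs
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for x, y in data:
            out = neuron(x, w, b)
            # Back-propagation for a single neuron: with cross-entropy loss,
            # the chain rule gives dLoss/dz = out - y.
            delta = out - y
            for i in range(n_inputs):
                grad_w[i] += delta * x[i]  # dLoss/dw_i = delta * x_i
            grad_b += delta                # dLoss/db   = delta
        # Gradient descent: move each parameter against its average gradient.
        w = [wi - lr * gi / len(data) for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / len(data)
    return w, b

# Toy dataset (an assumption for illustration): logical AND.
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

w, b = train_neuron(AND_DATA)
for x, y in AND_DATA:
    print(x, "target:", y, "prediction:", round(neuron(x, w, b), 3))
```

After training, the prediction for `[1, 1]` should sit well above 0.5 and the other three well below it; a deeper network repeats exactly this weight-and-bias update, layer by layer, using back-propagation to pass `delta` backwards.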

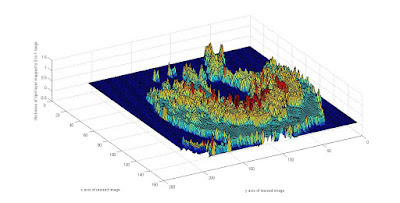

To build the students' intuition about neural networks, I had them code along with me a simple neural network that learns a sine function. At first, I purposely selected parameters that would end up in an overfitting scenario, which helped me explain this very important concept. Next, I gave a brief on Convolutional Neural Networks (CNNs) and their layers (convolution, pooling, dropout, flatten and regular fully connected layers), which we also implemented for the very simple digit recognition task on the MNIST dataset. I, of course, used the Keras API, which is very easy to understand, to build the networks, and I made sure to explain all the arguments used, such as the loss function, epochs, batch size, optimizer and so on.
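The CNN layers mentioned above can also be sketched without any framework. The pure-Python functions below are illustrative assumptions, not Keras internals: a "valid" convolution with stride 1 and no bias (computed as cross-correlation, as deep-learning libraries do), ReLU, non-overlapping 2x2 max pooling, flattening, and inverted dropout. They roughly mirror the default behaviour of the Keras `Conv2D`, `MaxPooling2D`, `Flatten` and `Dropout` layers; the fully connected layers that follow are ordinary neurons. The 6x6 image and the edge kernel are made up for the example.

```python
import random

def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 and no padding.
    Like deep-learning libraries, this computes cross-correlation
    (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + c] * kernel[a][c]
                for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    """Element-wise ReLU activation on a feature map."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    return [
        [
            max(fmap[i + a][j + c] for a in range(size) for c in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def flatten(fmap):
    """Turn a 2D feature map into a 1D vector for the dense layers."""
    return [v for row in fmap for v in row]

def dropout(values, rate, rng):
    """Inverted dropout (training only): zero each value with probability
    `rate` and scale the survivors by 1 / (1 - rate)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

# Hypothetical 6x6 "image": dark on the left, bright in the last two columns.
image = [[0, 0, 0, 0, 1, 1] for _ in range(6)]
# Hypothetical 3x3 kernel that responds to a dark-to-bright vertical edge.
edge_kernel = [[-1, 0, 1] for _ in range(3)]

features = relu(conv2d(image, edge_kernel))  # 4x4 feature map
pooled = max_pool(features)                  # 2x2 after pooling
vector = flatten(pooled)                     # length-4 vector
print(features)  # [[0, 0, 3, 3], [0, 0, 3, 3], [0, 0, 3, 3], [0, 0, 3, 3]]
print(pooled)    # [[0, 3], [0, 3]]
print(vector)    # [0, 3, 0, 3]
print(dropout(vector, rate=0.5, rng=random.Random(0)))
```

The convolution lights up only where the edge is, pooling keeps the strongest response in each 2x2 window while halving the size, and dropout randomly silences values during training so the network cannot rely on any single feature, which is one way to fight the overfitting described above.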

Towards the end, we discussed various applications of CNNs, and I demonstrated one of them: the code I wrote for the WiDS Datathon 2019 on Kaggle, where the problem statement was about oil palm plantations in satellite images. This let me introduce two more concepts: dealing with an unbalanced dataset, and how useful transfer learning can be, since I had used a pre-trained CNN model in my code. Here is the link to my workshop notebook: https://github.com/WiDSMumbai/WiDS_Workshop/blob/master/workshop.ipynb
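For the unbalanced-dataset point, one common remedy (not necessarily the approach in the Datathon code) is to weight each class inversely to its frequency. The sketch below uses the same formula as scikit-learn's `class_weight="balanced"` heuristic, n_samples / (n_classes * count); the 90/10 label split is a made-up example. A dictionary like this can be passed as the `class_weight` argument of Keras `Model.fit`.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * count_of_class),
    so every class contributes the same total weight to the loss."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt)
            for cls, cnt in sorted(counts.items())}

# Hypothetical unbalanced labels: 90 images without plantations, 10 with.
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)
print(weights)  # class 0 gets about 0.556, class 1 gets 5.0

# Each class now carries (about) the same total weight: 50 out of 100.
totals = {cls: weights[cls] * cnt for cls, cnt in Counter(labels).items()}
print(totals)
```

Without the weights, a model that always predicts "no plantation" would already be 90% accurate on this split; with them, every mistake on the rare class costs nine times as much as one on the common class.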

At the end of the workshop, I was glad that I had covered everything I prepared in exactly 2 hours and that I could answer all the doubts raised along the way. Out of curiosity about how much the students really understood, I asked a few questions in between, and their chorus of absolutely right answers made me smile instantly.

Thank you for reading till the end, and I hope to conduct more workshops in the future.

I'd also like you all to read my co-ambassador Julian's blog post on WiDS Mumbai 2019, which highlights the 9 amazing speakers we had: https://medium.com/@jsj14/wids-mumbai-2019-bacbf0b673cf. You can find their presentations here.

See you at our future events :)